It’s been a while, but we’re back - in time for story time.

Gather round, strap in, and prepare for another depressing journey of “all we wanted to do was reproduce an N-day, and here we are with 0-days”.

Today, friends, we’re looking at SolarWinds Web Help Desk, which has seen its fair share of in-the-wild exploitation and, while purporting to be a help desk solution, has had far more attention for its ability to provide RCE opportunities, with a confidence-inspiring amount of “oh it’s basica

By Aviv Donenfeld and Oded Vanunu. Executive Summary: Check Point Research has discovered critical vulnerabilities in Anthropic’s Claude Code that allow attackers to achieve remote code execution and steal API credentials through malicious project configurations. The vulnerabilities exploit various configuration mechanisms including Hooks, Model Context Protocol (MCP) servers, and environment variables - executing arbitrary shell commands […]

2025 winter challenge writeup

It’s been a while since I last dug into a Patch Tuesday release. With an extraordinarily high count of 177 CVEs, including 6 that were either already public or exploited in the wild, the October 2025 one seemed like a good opportunity to get back to it. The one I ended up investigating in depth was CVE-2025-59201, an elevation of privilege in the “Network Connection Status Indicator”.

Introduction: Check Point Research (CPR) continuously tracks threats, following the clues that lead to major players and incidents in the threat landscape. Whether it’s high-end financially-motivated campaigns or state-sponsored activity, our focus is to figure out what the threat is, report our findings to the relevant parties, and make sure Check Point customers stay protected. […]

In this excerpt of a TrendAI Research Services vulnerability report, Nikolai Skliarenko and Yazhi Wang of the TrendAI Research team detail a recently patched command injection vulnerability in the Windows Notepad application. This bug was originally discovered by Cristian Papa and Alasdair Gorniak

A Talos researcher used targeted emulation of the Socomec DIRIS M-70 gateway’s Modbus thread to uncover six patched vulnerabilities, showcasing efficient tools and methods for IoT security testing.

CVE-2025-61982

An arbitrary code execution vulnerability exists in the Code Stream directive functionality of OpenCFD OpenFOAM 2506. A specially crafted OpenFOAM simulation file can lead to arbitrary code execution. An attacker can provide a malicious file to trigger this vulnerability.

The...

Introduction: AI is rapidly becoming embedded in day-to-day enterprise workflows, inside browsers, collaboration suites, and developer tooling. As a result, AI service domains increasingly blend into normal corporate traffic, often allowed by default and rarely treated as sensitive egress. Threat actors are already capitalizing on this shift. Across the malware ecosystem, AI is […]

# Building a Secure Electron Auto-Updater

16 Feb 2026 - Posted by Michael Pastor

## Introduction

In cooperation with the Polytechnic University of Valencia and Doyensec, I spent over six months of my internship on research that combines theoretical foundations in code signing and secure...

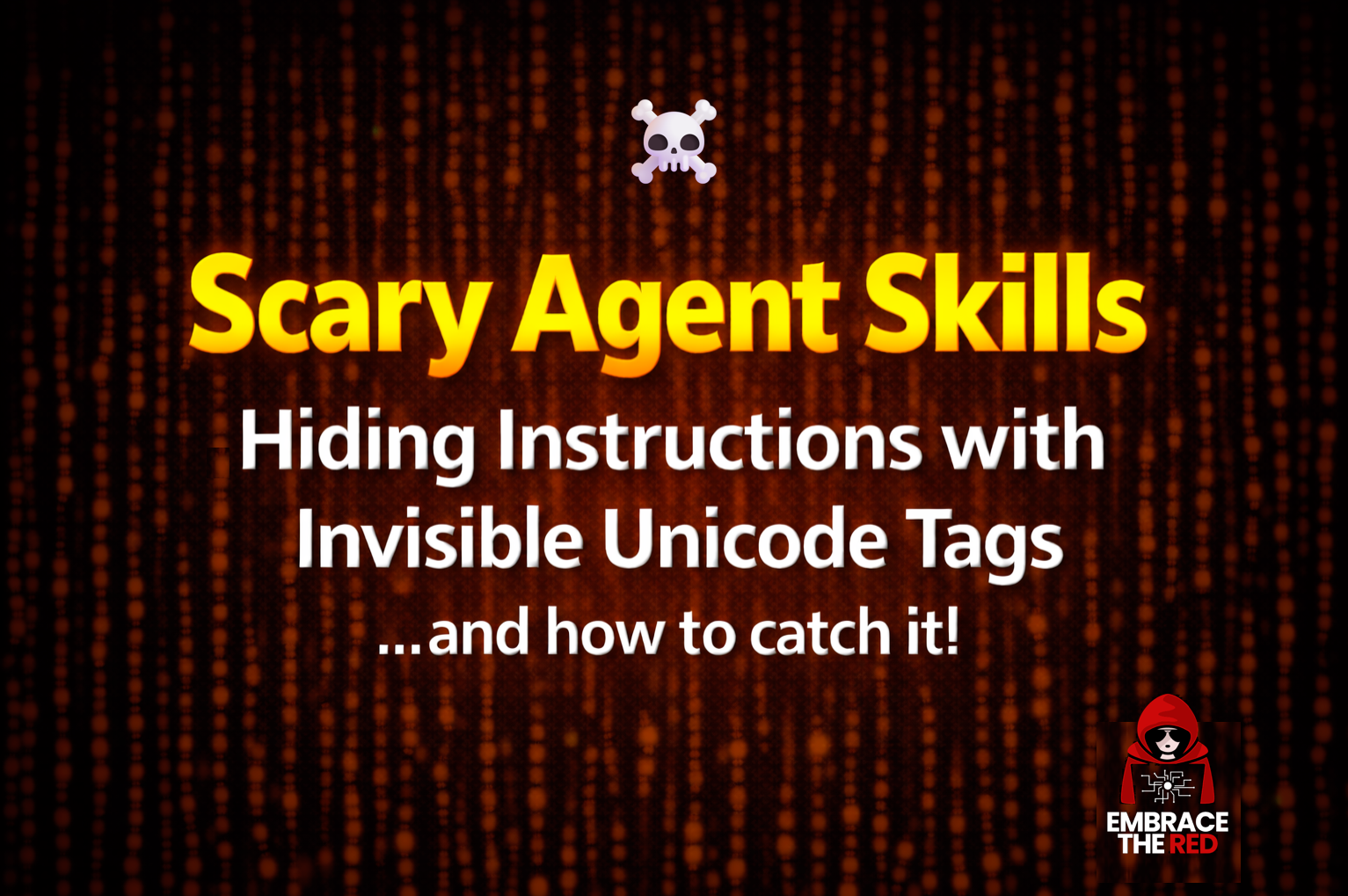

There is a lot of talk about Skills recently, both in terms of capabilities and security concerns. However, so far I haven’t seen anyone bring up hidden …

Oversecured has identified vulnerabilities in several popular mental health apps with tens of millions of downloads. The flaws could turn these apps into unintended data sources for surveillance, including personal conversations with AI therapists.

It’s no longer just about reverse-engineering n-days. You can detect vulnerabilities in open-source repositories before a CVE is published - or even if they’re never published. Here’s how I built an LLM workflow to detect “negative-days” and “never-days”.

In this excerpt of a TrendAI Research Services vulnerability report, Jonathan Lein and Simon Humbert of the TrendAI Research team detail a recently patched command injection vulnerability in the Arista NG Firewall. This bug was originally discovered by Gereon Huppertz and reported through the Tren

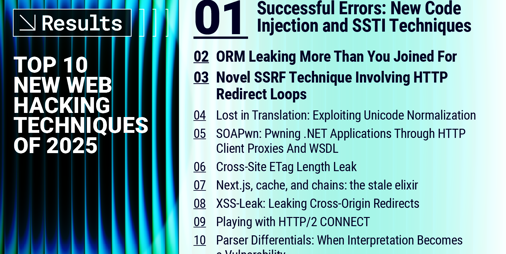

Welcome to the Top 10 Web Hacking Techniques of 2025, the 19th edition of our annual community-powered effort to identify the most innovative must-read web security research published in the last year

Last week I saw this paper from OpenAI called “Preventing URL-Based Data Exfiltration in Language-Model Agents”, which goes into detail on new …

TL;DR: In December 2025, Cisco published an advisory (https://sec.cloudapps.cisco.com/security/center/content/CiscoSecurityAdvisory/cisco-sa-sma-attack-N9bf4) addressing CVE-2025-20393, a critical vulnerability (CVSS 10.0) affecting Cisco Secure Email Gateway and Secure Email and Web Manager. The advisory was notably sparse on technical details, describing only “Improper Input Validation” (CWE-20).

We decided to dig deeper. Through reverse engineering and code analysis of AsyncOS 15.5.3, we uncovered the root cause: a single-byte integer overflow in the EUQ RPC protocol that bypasses authentication and chains into Python pickle deserialization — achieving unauthenticated remote code execution with a single HTTP request.

A friend of mine, namely Antide "xarkes" Petit, came up with a pretty good rule of thumb that I think should be elevated into a law, Antide's Law:

> If it's unclear what a cyber-security company is doing, what they're doing is pretty clear.

For example, take a look at Offensive Con 2025 and 2024...

Introduction: Check Point Research has identified several campaigns targeting multiple countries in the Southeast Asian region. These related activities have been collectively categorized under the codename “Amaranth-Dragon”. The campaigns demonstrate a clear focus on government entities across the region, suggesting a motivated threat actor with a strong interest in geopolitical intelligence. The campaigns […]

Discover how Mercari, Japan's largest marketplace app, transformed their mobile security program with Oversecured, uncovering critical vulnerabilities missed by previous tools and achieving reliable automated scanning at scale.

# Auditing Outline. Firsthand lessons from comparing manual testing and AI security platforms

03 Feb 2026 - Posted by Luca Carettoni

In July 2025, we performed a brief audit of Outline - an OSS wiki similar in many ways to Notion. This activity was meant to evaluate the overall posture of the...

The Google Groups Ticket Trick vector is alive and well, allowing me to briefly verify an openssl.org email address. Also, vibe-coding security tools is easier than ever.

Beyond ACLs: Mapping Windows Privilege Escalation Paths with

When Ivanti removed the embargoes from CVE-2026-1281 and CVE-2026-1340 - pre-auth Remote Command Execution vulnerabilities in Ivanti’s Endpoint Manager Mobile (EPMM) solution - we sighed with relief.

Clearly, the universe had decided to continue mocking Secure-By-Design signers right on schedule - every January.

Welcome back to another monologue that doubles as some sort of industry-wide counseling session we must all get through together.

As we are always keen to remind everyone, today’s blo

Check Point's flagship report delivers industry leading intelligence shaping the decisions security leaders will make in 2026

[this work was conducted collaboratively by Bryan Alexander and Jordan Whitehead] This post details several vulnerabilities discovered in the popular online game Command & Conquer: Generals. We recently presented some of this work at an information security conference and this post contain

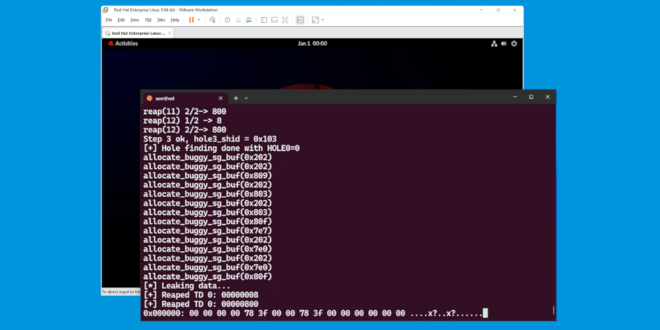

On the clock: Escaping VMware Workstation at Pwn2Own Berlin 2025

North Korea-linked threat group KONNI targets countries across APAC, specifically in blockchain sectors, with AI-generated malware

Well, well, well - look what we’re back with.

You may recall that merely two weeks ago, we analyzed CVE-2025-52691 - a pre-auth RCE vulnerability in the SmarterTools SmarterMail email solution with a timeline that is typically reserved for KEV holders.

The plot of that story had everything:

* A government agency

* Vague patch notes (in our opinion)

* Fairly tense forum posts

* Accusations of in-the-wild exploitation

The sort of thing dreams are made of~

Why Are We Here?

Well, as alway

### Summary

It is still possible (albeit with significantly more effort) to upload a specially crafted Wheel file (i.e. zip) to PyPI that when installed with PIP (or another Python zipfile based t...

# Intercepting OkHttp at Runtime With Frida - A Practical Guide

22 Jan 2026 - Posted by Szymon Drosdzol

### Introduction

OkHttp is the de facto standard HTTP client library for the Android ecosystem. It is therefore crucial for a security analyst to be able to dynamically eavesdrop on the traffic...

Learn how we are using the newly released GitHub Security Lab Taskflow Agent to triage categories of vulnerabilities in GitHub Actions and JavaScript projects.

VoidLink's framework marks the first evidence of fully AI-designed and built advanced malware, beginning a new era of AI-generated malware

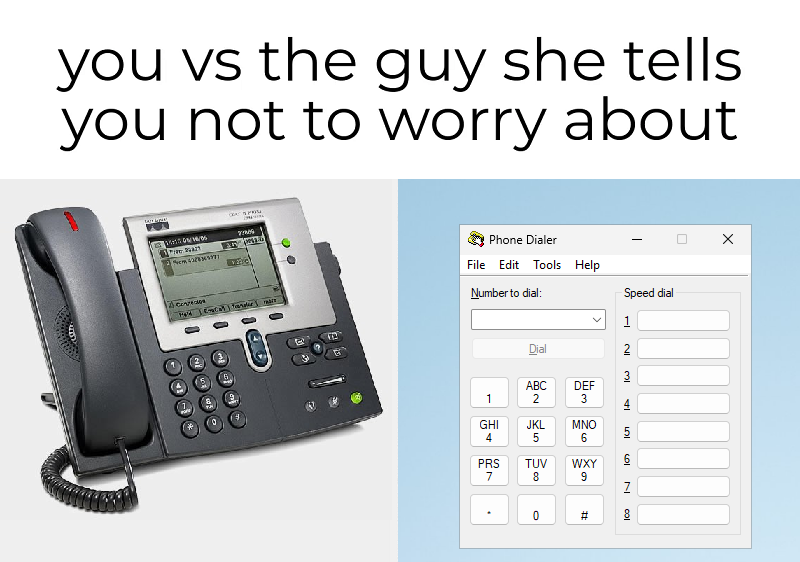

Windows has supported computer telephony integration for decades, providing applications with the ability to manage phone devices, lines, and calls. While modern deployments increasingly rely on cloud-based telephony solutions, classic telephony services remain available out of the box in Windows and continue to be used in specialized environments. As a result, legacy telephony components still […]

Recently I ran an experiment where I built agents on top of Opus 4.5 and GPT-5.2 and then challenged them to write exploits for a zero-day vulnerability in the QuickJS JavaScript interpreter. I adde…

Consuming from Microsoft-Windows-Threat-Intelligence without Antimalware-PPL or kernel patching/driver loading.

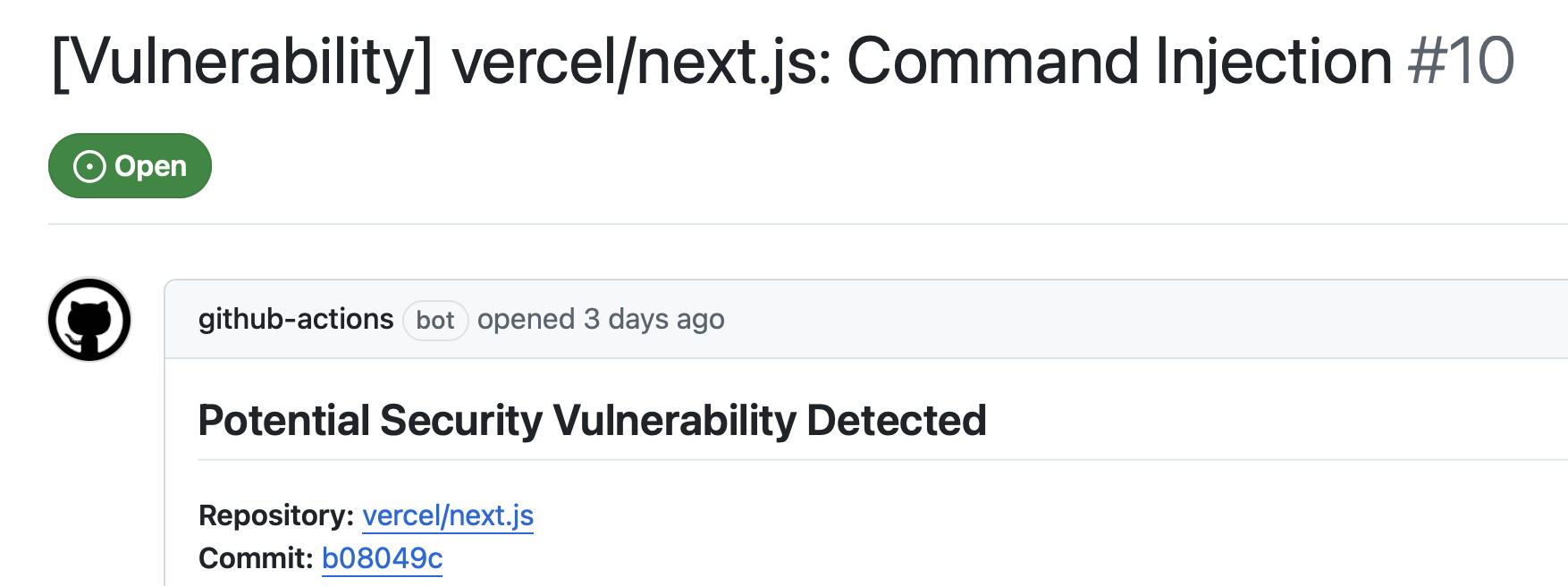

In this post, we’ll look at how an adversary can mint authentication cookies for Next.js (next-auth/Auth.js) applications to maintain persistent access to …

Announcing GitHub Security Lab Taskflow Agent, an open source and collaborative framework for security research with AI.

Introduction: In December 2025, a previously unknown Ransomware-as-a-Service (RaaS) operation calling itself Sicarii began advertising its services across multiple underground platforms. The group’s name references the Sicarii, a 1st-century group of Jewish assassins that opposed Roman rule in Judea. From its initial appearance, the Sicarii ransomware group distinguished itself through unusually explicit and persistent use of Israeli […]

Mobile app security has become significantly harder over the past few years. Modern mobile applications rely on dozens of third-party SDKs, complex authentication flows, background services, deeplinks, and constant interaction with device-level APIs.

Wireless-(in)Fidelity: Pentesting Wi-Fi in 2025

The new framework maintains long-term access to Linux systems while operating reliably in cloud and container environments

Welcome to 2026!

While we are all waiting for the scheduled SSLVPN ITW exploitation programming that occurs every January, we’re back from Christmas and idle hands, idle minds, yada yada.

In December, we were alerted to a vulnerability in SmarterTools’ SmarterMail solution, accompanied by an advisory from Singapore’s Cyber Security Agency (CSA) - CVE-2025-52691, a pre-auth RCE that obtained full marks (10/10) on the industry’s scale.

Vulnerabilities like these are always exciting, because whe

Eight years ago today, I started STAR Labs by hiring several fresh grads with no work experience.

Today, I stand here with a different group of faces. Some of you were there from the beginning. Some of you joined along the way. Some of you just started last month.

And some of the people who were here… aren’t anymore.

Not because they failed. Not because we failed them. But because life called them in different directions.

Introduction: GoBruteforcer is a botnet that turns compromised Linux servers into scanning and password brute-force nodes. It targets internet-exposed services such as phpMyAdmin web panels, MySQL and PostgreSQL databases, and FTP servers. Infected hosts are incorporated into the botnet and accept remote operator commands. Newly discovered weak credentials are used to steal data, […]